Command-driven trajectory planning and following for a self-driving car in a simulated environment

CARLA simulator, vision-based, supervised learning

My master's thesis on a fully autonomous agent that includes perception, planning, and control.

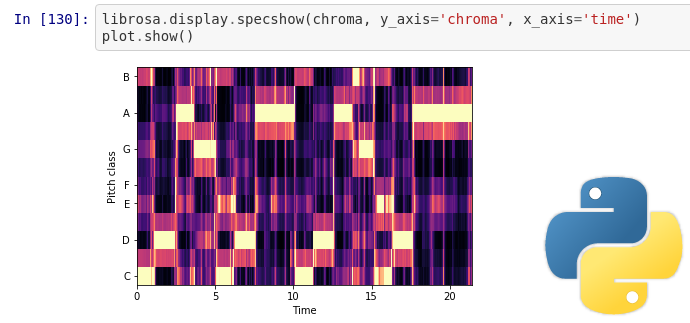

My agent can navigate from A to B by following human commands such as turn left, turn right, keep lane, and go straight (similar to GPS navigation). The only inputs to the planning module (a neural network) are a 224x224 RGB image and a command. Its output is a trajectory extending roughly 15 m ahead of the agent's car. The most important thing to note is that the predicted trajectory does not say "how to drive" but "where the legal path that should be followed is".

Besides planning, the agent can detect and react to traffic lights, using a simple perception module built with the TensorFlow Object Detection API. The proposed Reference Trajectory Approximation method gives a better visual understanding of how the agent perceives the environment in the long run.

The internals of this system are not as complicated as they may seem (compared with real-world systems), but the research, data gathering, implementation, and evaluation took a very long time.

More videos + extras (MLinPL): link

Feel free to contact me directly if we share research interests or if my work just caught your eye 😉.
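Because the network only predicts *where* the legal path is, a separate controller has to turn that path into steering. The snippet below is a minimal sketch of that hand-off, not the thesis implementation: it assumes the trajectory arrives as `(x, y)` waypoints in metres in the car's frame (x forward, y left), one-hot encodes the four commands (an assumed encoding), and uses pure pursuit, a common path-following technique swapped in here for illustration. The names `encode_command`, `path_length`, and `pure_pursuit_steering`, as well as the 2.9 m wheelbase and 5 m lookahead, are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical command set and one-hot encoding (an assumption, not the thesis format).
COMMANDS = ("turn_left", "turn_right", "keep_lane", "go_straight")

Waypoint = Tuple[float, float]  # (x forward, y left) in metres, car frame (assumed)


def encode_command(command: str) -> List[float]:
    """One-hot encode a navigation command for the planner input."""
    if command not in COMMANDS:
        raise ValueError(f"unknown command: {command}")
    return [1.0 if c == command else 0.0 for c in COMMANDS]


def path_length(waypoints: Sequence[Waypoint]) -> float:
    """Arc length of the trajectory, measured from the car's position (0, 0)."""
    total, prev = 0.0, (0.0, 0.0)
    for p in waypoints:
        total += math.dist(prev, p)
        prev = p
    return total


def _lookahead_point(waypoints: Sequence[Waypoint], lookahead: float) -> Waypoint:
    """First waypoint at least `lookahead` metres from the car, else the last one."""
    for p in waypoints:
        if math.hypot(*p) >= lookahead:
            return p
    return waypoints[-1]


def pure_pursuit_steering(
    waypoints: Sequence[Waypoint], wheelbase: float = 2.9, lookahead: float = 5.0
) -> float:
    """Front-wheel steering angle in radians (positive = left) via pure pursuit."""
    if not waypoints:
        raise ValueError("empty trajectory")
    x, y = _lookahead_point(waypoints, lookahead)
    ld = math.hypot(x, y)
    if ld == 0.0:
        return 0.0
    alpha = math.atan2(y, x)  # heading error towards the lookahead point
    return math.atan(2.0 * wheelbase * math.sin(alpha) / ld)


if __name__ == "__main__":
    straight = [(float(i), 0.0) for i in range(1, 16)]  # ~15 m straight path
    print(encode_command("keep_lane"), path_length(straight), pure_pursuit_steering(straight))
```

Keeping the controller outside the network mirrors the README's point: the planner can be judged on whether the path is legal, while speed and steering behaviour are tuned independently.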

Poster presented at ML in PL 2019

Thesis PDF (Polish)

Video summary for EEML 2020 (English)